Start Up, not Down.

The 80's Were Ok, I Guess

As a child of the 80's, I was raised with a lot of mixed messages, and they took some bizarre forms. I distinctly remember Poison's "Open Up and Say Ahh" being re-released solely because parental groups were concerned that the devilish cover art was somehow hypnotizing teens into a riotous hormonal rage. Even at the tender age of nine, I was surprised that covering up everything but the eyes would somehow appease groups that supposedly cared about decency.

[Image: Hair Metal Hijab!]

[Image: George Carlin was more controversial]

Weren't the lyrics and hair-metal themes far more of a concern than ridiculous cover art? Either way, I certainly didn't care, because it meant I was finally able to buy my own copy of the album. And boy, was I thrilled to tear open that cassette packaging, completely ignoring the cover art, to settle into less than an hour of sonic distress on a tape I would soon toss into a pile and forget.

[Image: Much Better Music]

Regardless, this was one of the earliest instances I can recall of adults making insincere compromises in the name of safety (and, I guess, decency). Let's not forget that only three years earlier, Tipper Gore co-founded the Parents Music Resource Center. The PMRC advocated against music that glamorized sex, drugs, and violence (by which I guess they meant Rock and Roll). What they didn't tell us is that Tipper was (at the time) a closet Deadhead who went on tour in her youth. I bet she and Bill had many a nostalgic night reminiscing about never inhaling.

|

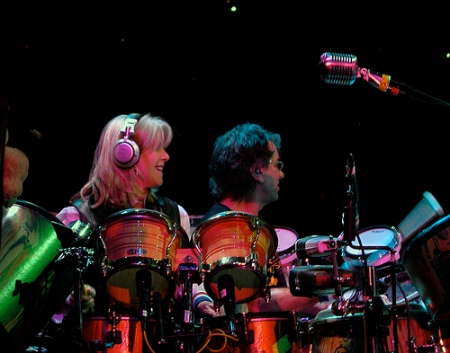

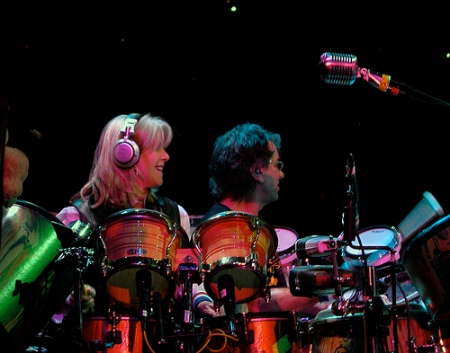

| Tipper performing Sugar Magnolia with The Dead 4/20/09 |

But I'm Checking Boxes

These behaviors and mixed messages bleed into everything humans do. We project our perception of social norms onto the things we create, whether it's a plane, a car, or an Internet-connected watch. This year I've engaged with a growing number of organizations that are thinking about security from a similar perspective. It's easy for executives to talk with each other and compare the methods they are using to secure their networks. Because security seems like such an intangible black box, it is difficult to quantify the actual return when budget is assigned to decrease risk. So if executives see that their company's activities fall in line with those of other companies that have not been publicly compromised, all must be well!

However, as is often the case with simply checking boxes and moving on, reality is quite different. Home Depot, Target, and other major organizations hit by recent computer-based attacks were all subject to PCI compliance requirements. This means they were indeed checking boxes, validating patches, and scanning networks to reduce their attack surface. And yet, they were compromised!

[Image: Hackers gonna hack, AMIRITE!?]

Penetration tests can help establish a baseline for infrastructure, but that baseline is simply a snapshot in time. Because the security ecosystem changes constantly, today's scan will never be representative of tomorrow's network, even if the network components haven't changed: new vulnerabilities keep being disclosed in the software you already run. Security is a commodity whose value is constantly in flux, so evaluating risk on penetration testing alone is not only misleading, it's devoid of value.

Penetration tests cost tens of thousands of dollars and may be performed once a quarter, or even once a month. Yet if the organization's security landscape is constantly in flux, these tests provide little lasting insight into the real state of the organization and its assets. As a result, the organization is essentially flushing hundreds of thousands of dollars down the toilet, because it isn't using the output of its penetration tests effectively.

Realigning Organizational Strategy

This trend becomes even more concerning as the Internet of Things becomes part of everyday business. As IoT systems become more common in the workplace, new threat models, assessments, and controls must be put in place to determine how to monitor, manage, and deploy these assets. If you thought Bring Your Own Device was bad, consider Bring Your Own Anything.

In several cases over the past year and a half, clients have had difficulty seeing the value in evaluating the architecture of their IoT product or service. The executives I've spoken with have had concerns about the cost of such a review, which is understandable. When you pay for a service with a seemingly intangible return, it is difficult to pull the trigger. This is especially true if you misjudge your team's security experience and believe that patching and penetration testing can substitute for threat modeling and architectural strengthening.

On the flip side are the other executives I've spoken with. These executives understand the value of a security review and believe they need it. However, they are having trouble allocating budget toward a review because it feels too early to spend on consultants for an intangible piece of the technology puzzle. They know the value, but struggle to sell it upstream because start-ups have limited resources and must move fast.

[Image: Doozers solving architectural security concerns (clearly).]

These are all understandable problems, and I sympathize with them. It's difficult to understand what a valuable security practice looks like without having gone through the process of incorporating one into a product or service. It's even more difficult to incorporate one when your entire seed budget or Series A round is focused on bootstrapping a proof of concept that will win you partnerships in key verticals or access to a larger client pool.

Bottom Line it For Me

Let's go over some numbers, shall we? Maybe this will help elucidate the actual return of a security program integrated into a product or service, whether that product or service is being developed or simply being used.

Roughly speaking, the cost of a penetration test is the hourly rate multiplied by the consultant-hours needed to cover the scoped infrastructure, plus overhead, reporting, and on-site requirements. Let's start with a simple example: Average Start Up (ASU). ASU has twenty people, a typical size for a start-up. They have a small in-office datacenter, cloud infrastructure, and hosted physical servers in two separate locations (east coast and west coast). This is Pretty Average.

Let's say ASU is smart and knows the penetration testers are skilled, so they estimate it will take the testers 2-3 days to compromise the network. ASU wants useful data from this project, so they need a well-written report and assistance interpreting the data correctly. Add another 2 days of work. So, let's give the project a full five-business-day scope. Two penetration testers are engaged to minimize the time required to scan and evaluate the entire infrastructure. Consultants work a flat eight-hour day. The industry average is around 250 USD per hour. But, since ASU is a start-up, let's presume they get the "friendly" introductory rate of 150 USD per hour. So, we're talking:

Days = 5

Hours per day = 8

Consultants = 2

Price Per Hour = 150 USD

Days * Hours * Consultants * Price = 5 * 8 * 2 * 150 = 12,000 USD
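The same arithmetic as a small Python function, for readers who want to plug in their own numbers. This is a minimal sketch that assumes the labor-only model above; it deliberately ignores the overhead, reporting, and on-site costs mentioned earlier, and the name `pen_test_cost` is hypothetical.

```python
def pen_test_cost(days: int, hours_per_day: int, consultants: int, rate_usd: int) -> int:
    """Labor-only cost of one penetration test engagement, in USD.

    Hypothetical helper: models billable hours only and ignores the
    overhead, reporting, and on-site costs mentioned in the text.
    """
    return days * hours_per_day * consultants * rate_usd


# ASU's scoped engagement at the "friendly" start-up rate.
asu_cost = pen_test_cost(days=5, hours_per_day=8, consultants=2, rate_usd=150)
print(f"{asu_cost:,} USD")  # 12,000 USD

# The same scope at the ~250 USD/hour industry average.
print(f"{pen_test_cost(5, 8, 2, 250):,} USD")  # 20,000 USD
```

The second call shows how much the introductory rate matters: at the industry-average rate, the same five-day scope costs 20,000 USD.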

Keep in mind that the 12,000 USD only buys the results of the penetration test. Once personnel at the organization implement the changes required to "pass" the test, a new test must be performed. Let's presume for now that this second test is free, since Pen Test Company (PTC) is running a special for start-ups like ASU.

But wait! There's more! ASU gets the benefit of the re-test that gives them a passing grade. But they keep hearing about all these new vulnerabilities coming out of the woodwork: LZO, LZ4, Heartbleed, the Bash bug, etc.

And what's this about some bizarre new RSA 1024-bit key hack thingie?!? Is that even real!? How do we test for that?!

Ah, yes. Now the penetration testers have to come back. If it's once per quarter, we're talking about 48,000 USD per year. If it's once a month, which is a more realistic cadence for a somewhat reasonable analysis, we're talking about 144,000 USD per year. Unsustainable.
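A sketch of how the yearly bill scales with retest cadence, assuming (as the figures above do) that every scheduled test is billed at ASU's full 12,000 USD engagement price. `annual_cost` and the cadence table are illustrative names, not part of any tool.

```python
# Labor-only cost of one ASU engagement, from the example above:
# 5 days * 8 hours * 2 consultants * 150 USD/hour.
COST_PER_TEST_USD = 5 * 8 * 2 * 150

# Retest cadences discussed in the text, as tests per year.
CADENCES = {"quarterly": 4, "monthly": 12}


def annual_cost(tests_per_year: int, cost_per_test: int = COST_PER_TEST_USD) -> int:
    """Yearly spend if every scheduled test is billed at the full engagement price."""
    return tests_per_year * cost_per_test


for name, per_year in CADENCES.items():
    print(f"{name}: {annual_cost(per_year):,} USD per year")
# quarterly: 48,000 USD per year
# monthly: 144,000 USD per year
```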

At this point executives are rightfully pissed, and probably feel pretty shitty about where their money is going. The security process seems unmanageable, and the money feels burned.

Stop Killing Money

There is a straightforward way to stop this ridiculous cycle. It starts with a simple threat model and ends with processes and controls that integrate security not only into the daily engineering process, but into the workplace. Every technology can be hacked. My work is proof of that. I have exceptional colleagues who are even better proof of that than I am: Charlie Miller and Chris Valasek, Zach Lanier and Mark Stanislav, Stephen Ridley, Thaddeus Grugq, Ben Nagy, and countless others.

Yes, everything can be hacked. But there is a method for reducing risk and managing it in a cost-effective manner:

- Identify key assets that affect the business, its partners, and its customers

- Prioritize assets by potential effect

- Build security goals around these prioritized assets

- Define policies and procedures that support security goals

- Ensure infrastructure supports security goals

- Monitor infrastructure constantly and assign responsibilities

- Integrate security engineering into the product and/or service life cycle
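The first three steps can be made concrete with a toy asset inventory. This is a minimal sketch under loudly stated assumptions: the asset names, the 1-to-5 impact scale, and the additive scoring are all invented for illustration, and a real threat model would weigh effects with far more nuance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    """A business asset scored by its potential effect if compromised.

    Assumed scale: 1 (minor) to 5 (severe) for each party named in
    step 1: the business, its partners, and its customers.
    """
    name: str
    business: int
    partners: int
    customers: int

    @property
    def potential_effect(self) -> int:
        # Simple additive score; a real threat model may weight these differently.
        return self.business + self.partners + self.customers


def prioritize(assets: list[Asset]) -> list[Asset]:
    """Step 2: order assets from highest to lowest potential effect."""
    return sorted(assets, key=lambda a: a.potential_effect, reverse=True)


def security_goals(ranked: list[Asset], top_n: int = 2) -> list[str]:
    """Step 3: draft one goal per high-priority asset (wording is illustrative)."""
    return [f"Protect the confidentiality and integrity of the {a.name}" for a in ranked[:top_n]]


# Hypothetical inventory for ASU; names and scores are invented for illustration.
inventory = [
    Asset("marketing website", business=2, partners=1, customers=1),
    Asset("customer database", business=5, partners=3, customers=5),
    Asset("build server", business=4, partners=2, customers=3),
]

ranked = prioritize(inventory)
for asset in ranked:
    print(asset.name, asset.potential_effect)
# customer database 13
# build server 9
# marketing website 4
for goal in security_goals(ranked):
    print(goal)
```

Steps 4 through 7 (policies, infrastructure, monitoring, and SDLC integration) would hang off these goals, but they are organizational work rather than something a script captures.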

This simple seven-step process will take a company from zero to hero far faster than it ever could with penetration testing engagements or managed security services. Let's look at a simple engagement in which a consultant walks ASU through this process.

Asset review/Priority meeting/Initial Threat Model = 5-10 days

Defining policy and procedure/Enhancing infrastructure/Building baseline = 5-10 days

Define monitoring system/Assign responsibility/Add OSS controls = 5-10 days

Integrate security into SDLC (only if org has engineering dept) = 10 days

Average consulting rate = 250 USD per hour (one consultant, eight-hour days)

Total High Watermark = 40 days * 8 hours * 250 USD = 80,000 USD

Total Low Watermark = 25 days * 8 hours * 250 USD = 50,000 USD
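The watermarks follow from summing the phase estimates and assuming a single consultant working eight-hour days, which is the staffing that reproduces the 50,000 and 80,000 USD figures. A sketch of that calculation; `engagement_cost` and the `include_sdlc` switch are hypothetical names, the latter modeling the note that SDLC integration only applies to organizations with an engineering department.

```python
# Consultant-days per phase as (low, high), from the estimate above.
CORE_PHASES = [
    (5, 10),  # asset review / priority meeting / initial threat model
    (5, 10),  # policy and procedure / infrastructure / baseline
    (5, 10),  # monitoring system / responsibilities / OSS controls
]
SDLC_PHASE = (10, 10)  # SDLC integration, only for orgs with engineering

HOURS_PER_DAY = 8  # assumption: one consultant, flat eight-hour days


def engagement_cost(rate_usd: int, include_sdlc: bool = True) -> tuple[int, int]:
    """Return the (low, high) watermark in USD for a single consultant."""
    phases = CORE_PHASES + ([SDLC_PHASE] if include_sdlc else [])
    low_days = sum(low for low, _ in phases)
    high_days = sum(high for _, high in phases)
    per_day = HOURS_PER_DAY * rate_usd
    return low_days * per_day, high_days * per_day


low, high = engagement_cost(rate_usd=250)
print(f"low: {low:,} USD, high: {high:,} USD")  # low: 50,000 USD, high: 80,000 USD
```

Under the same assumptions, dropping SDLC integration (`include_sdlc=False`) lowers the range to 30,000 to 60,000 USD.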

A single threat modeling and architecture enhancement engagement will cost between 50,000 USD and 80,000 USD, and it typically only needs to be performed once. A solid architecture not only enables the organization to diminish its risk, but also helps it understand how to manage its security in a way that provides longevity. When a threat arises, the organization will be better equipped to respond effectively, rather than relying on an external organization to swoop in and solve the problem for a six-figure price.

In addition, internal penetration testing capability and vulnerability assessment automation can be integrated into the process defined by this engagement, allowing the organization not only to audit itself but also to interpret the results of the audit effectively.

An organization the size of our example, ASU, would come in at the low end of the price spectrum. Even at the higher rate of 250 USD per hour, the project would come out only 2,000 USD above one year of quarterly penetration testing (50,000 USD versus 48,000 USD), but with a far greater return on investment! If ASU negotiated the price down to the penetration testing team's rate of 150 USD per hour, the cost would end up at 30,000 USD. This is only two and a half times the price of a single 12,000 USD penetration testing engagement.
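The comparison can be checked directly with the figures already established for ASU. This sketch assumes the low watermark of 25 consultant-days at eight hours per day and reuses the 12,000 USD single penetration test; the constant names are illustrative.

```python
# Figures from the ASU example (USD).
PEN_TEST = 5 * 8 * 2 * 150          # one engagement at 150 USD/hour
QUARTERLY_PEN_TESTS = 4 * PEN_TEST  # one year of quarterly testing
LOW_DAYS = 5 + 5 + 5 + 10           # low watermark of the threat-model engagement

threat_model_at_250 = LOW_DAYS * 8 * 250
threat_model_at_150 = LOW_DAYS * 8 * 150

print(threat_model_at_250 - QUARTERLY_PEN_TESTS)  # 2000
print(threat_model_at_150)                        # 30000
print(threat_model_at_150 / PEN_TEST)             # 2.5
```

The key difference the numbers do not show is recurrence: the penetration testing figure repeats every year, while the engagement typically only needs to be performed once.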

Failure Shouldn't Be Feared

Will organizations get hacked? Of course. Will an organization with a well-defined security practice still get hacked? Unfortunately, it is likely. But will an organization with a well-defined security practice identify, isolate, and expunge the threat quickly and effectively, with far less risk to the business and its clients? Yes!

The security process is not perfect. But that is no reason to allocate resources to the wrong activities and then, when a weakness is exploited, argue that we did the same things everyone else did. Instead of learning to do the wrong things, we must be brave enough to do the things that are harder, sooner.

If we learn these lessons, we'll be able to decrease risk not only in our working environments, but within our products and services. Failure isn't something to be terrified of. We must integrate the lessons learned into our processes so that we are less likely to fail, instead of shaking our heads and presuming it isn't going to happen to us.

More importantly, lawyers tell us that when companies prepare to IPO or be acquired, security audits are increasingly becoming a mandatory part of the process. There have been more than a few cases in the past several years where companies being evaluated for acquisition failed because their security architecture was so unmanageable that it would have required a complete overhaul. This is not how you get a shiny new red 1993 Porsche 911.

[Image: Success is a mobile phone.]

Everyone fails sooner or later, especially in information security. Pretending the underlying problem doesn't exist is the same as putting black bands on a Poison album cover and saying you've saved the innocence of American teenagers. The real sign of success in an information security program is how quickly you recover, how effectively you can isolate risk to your business and your clients, and how much your customers trust your transparency.

As it happens, Lab Mouse is running a special discount on threat modeling and architecture security engagements for start-ups and small businesses! If you're in need of security services, please reach out! I will be happy to provide you with a valuable engagement that scales according to your budget.

Best wishes,

Founder

Lab Mouse Security