Why I Caught Pokemon All Day Long Today

Most of y'all know by now I've got a four week old little man by my side 24/7, and it's the best thing ever. It also means that almost 100% of my time consists of: feeding, burping, changing, sleeping, or hacking. The precious free minutes I get are spent at the gym or running in the park, just to keep in some semblance of shape. This is why I was thrilled when PokemonGO came out.

Aside from simply being fun as balls, it's a game I can play with my baby even though he has no idea what is going on. We can wander around the park, our neighborhood, or wherever, doing something. While a childless reader may think to themselves "why not just walk them around without Pokemon?", there is an important reason: boredom.

[Image: Fun. As balls.]

While my baby boy is amazing and I love him far more than I could ever imagine loving a tiny creature that can't even really see me, there is only so much you can do with a baby this little. So, when you're walking around the park or the 'hood, you can only explain trees, flowers, birds, and squirrels so many times. It gets repetitive.

Enter Pokemon GO.

Now, I can still tell him all kinds of things about nature and life, but we can do something else, too. It provides a nice contrast and an escape, while getting outside and walking around together. It introduces a cute randomness into an hour out of our day and that's great.

This is why I was really annoyed with certain personalities from the information security industry getting all touchy on Twitter today about the latest in Poke-tech.

The Infosec Team Rocket

Pokemon GO had been getting a bad rep in the infosec scene for a few days even before today's Google permissions explosion. People's irrational (and sometimes rational) fears have been the major focus of the issues discussed on Twitter. I won't bother listing or debating them here, though some of them are a real part of a Pokemon GO threat model, and I'll discuss them later.

[Image: Dammit.]

But, the real rain on everyone's parade came today, when it was discovered that the Pokemon GO app requests permissions from a Google account that far exceed what it needs to function. And yes, that is an oversight and must absolutely be corrected. In fact, Niantic has already released a statement about their interpretation of the issue. The following sentence from the statement is, perhaps, the most important one:

Google has verified that no other information has been received or accessed by Pokémon GO or Niantic.

This assertion by Niantic claims that Google has verified that Niantic has not abused its access to Google users' accounts. And this was the primary reason why I wasn't concerned: Google isn't a stupid company. They have exceptional security engineers, many of whom are friends of ours, either directly or indirectly. They also have a strict permissions model and monitoring subsystem for applications, to identify if there are (or were) abuses. If Google is backing Niantic's claim that no abuses have occurred, I believe them.
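To make the over-broad permissions complaint concrete, here is a minimal sketch of the check a reviewer might run: compare what an app requests against what it plausibly needs. The scope names below are hypothetical placeholders I made up for illustration; they are not Google's or Niantic's real identifiers.

```python
# Hypothetical scope names, for illustration only. These are NOT real
# Google OAuth scopes or anything Niantic actually requested.
NEEDED_SCOPES = {"profile.id", "profile.email"}


def excess_scopes(requested, needed=NEEDED_SCOPES):
    """Return the requested scopes that go beyond what the app needs."""
    return sorted(set(requested) - set(needed))


if __name__ == "__main__":
    requested = {"profile.id", "profile.email", "account.full_access"}
    # Anything printed here is access the app asked for but has no use for.
    print(excess_scopes(requested))
```

An empty result means the request is least-privilege. Anything else is the kind of oversight that should be corrected, whether or not it was ever abused.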

But, for a minute here, let's ignore what is likely to have happened, and look at what could have happened.

Playing What If

The reason so many researchers were e-screaming their digital heads off today was the what-if factor. What if an adversary compromised a phone with Pokemon GO loaded on it and captured the OAuth token that granted full access to the Google account? What if an adversary or insider was able to compromise the back-end database and usurp a massive cache of Uber-Mode security tokens? What if Psyduck came to life and actually entranced the Niantic and Google security teams and made off with all their juicy security tokens? What if?!?!

[Image: Madness and Indecision]

First of all, this is why there are stringent security controls on iOS, Android, and any other modern mobile platform (including the Lab Mouse Security HarvestOS, which will be publicly discussed in the coming weeks). Platform security controls are supposed to disallow a malicious app from accessing security tokens for a separate app. In theory this model works. In practice, it sometimes works, but it depends on the platform. iOS is much better about application security and cross-application attacks. Android, however, is less successful at security in this area, but still does a sufficient job in most cases.

If I were using an up-to-date Android firmware on a fairly modern device, I would feel mostly secure. Since I am using a modern iOS device with an up-to-date iOS image, I feel pretty damn secure about playing a damn video game. An attacker subverting valid and unaltered system controls on these platforms is unlikely because of the level of expertise required to accomplish the task. If the hacker is targeting me personally because I am a known infosec personality, and they are sufficiently skilled, there is probably little I can do to thwart them. So it goes.

More importantly, the people upset about this part of the threat model forget one key fact: anyone skilled enough to bypass platform security controls at this level can do a lot worse than snatch an OAuth token for a stupid video game app. They can manipulate the entire phone, and usurp its functionality to control the Apple or Google account, anyway. So, by that token, who gives a shit? Your entire phone is owned anyway. The Google account is probably the least of your worries, not to mention that if you are using an Android phone, or an iOS device with Google apps installed on it, an OAuth token with high privileges probably already exists on your device.

[Image: Break that Jail, Ash!]

The other part to this argument, which is valid, is with respect to jailbreaking. For those unfamiliar, jailbreaking is the process of subverting a smart phone's security in order to run custom firmware. There are valid reasons to do this: freedom of choice, breaking out of regional restrictions, accessing unofficial apps, and subverting carrier controls. Yet, the user is knowingly subverting the platform's security model. That is what jailbreaking is. So, if the user is knowingly doing this, they are putting themselves at risk of decreased security by invalidating the controls used on the platform. Malware attacks, hacks, spyware, and other issues are all a valid concern for any user with a jailbroken phone. So, again, if the user is just worried about their damn Pokemon GO app, they are thinking incorrectly about their threat model.

The final argument, which does have merit, is with regard to remotely delivered jailbreaks (often loosely called "un-tethered" jailbreaks, although strictly that term describes a jailbreak that persists across reboots without a computer). A remote jailbreak is one that can occur without physical access to the phone. In other words, it can be used as an attack. A user who hasn't kept their phone up-to-date with the latest firmware image may be susceptible to this kind of jailbreak attack by visiting a malicious website, or by some other means. This attack can render the device jailbroken, and may allow an adversary to remotely control the phone. Yet, again, this is an attack on the phone itself and not on Pokemon GO. So, these users are susceptible to far worse compromise than their stupid video game app.

Building a Legitimate Threat Model

So, now that we have a better understanding of Twitter's concerns, let's take a look at real concerns with Pokemon GO. Here is a brief and messy threat model:

Application Based:

- Login Credentials (Google or Pokemon Server)

- Application Secure Storage

- Communication Security

API Based:

- Credential Leakage

- Metadata Extraction

- Authentication and Authorization

- Partners

Game Based:

- Physical location tracking

- Trainer to Human conversion

- Baiting

Application Based Risks

In all honesty, this is the part of the model that I am least concerned about. Yet, this is the part that Twitter has been most vocal about.

Login Credentials

Regardless of which server type is used, critical data can be extracted. Since presumably more kids are associated with Pokemon server accounts, their personal information must be sufficiently guarded. It isn't OK to trivialize the Pokemon server tokens while exaggerating the Google ones. Both must be protected, as both tokens potentially expose critical data.

However, to access the token, we know that at least one of the following must be true:

- Smartphone security must be subverted by an adversary

- Back-end security must be subverted by an adversary

- Communications security must be subverted by an adversary

As described above, smartphone security is a risk. Yet, actually exploiting the risk is difficult against users who don't have out-of-date firmware or ancient phones. All users should update to the latest firmware images, sharply decreasing the risk of an attack.

While I cannot speak for Niantic's internal security, Google is now known to be assisting in monitoring the data extracted from the OAuth tokens. This will at least help identify if abuses do occur, and will allow Google to assist in prevention. This decreases the potential for back-end or internal abuses. Users with long-term concerns can simply invalidate the OAuth token by logging into their Google account and revoking access to Pokemon GO in their security settings.

Communications security is another story. There are already reports of users abusing TLS in the Pokemon GO app because of a lack of certificate pinning. Niantic should review their TLS deployment to ensure there are no potential abuses that could result in an attack against communication between smartphones and the Niantic servers. But, for now, there is no known MITM attack, only attacks where the app is instrumented by a researcher.

Application Secure Storage

If an app uses the underlying application security mechanisms provided by the platform, and does so in accordance with the platform's security guides, the application has done the best it can do. If the application has not adhered to these recommendations and guides, malicious applications may be able to subvert programmatic flaws in order to gain access to useful data. Yet, again, this would require direct access to the device by a malicious application or a physical user.

Communications Security

Yes, this is important. Certificate pinning should be used. It, apparently, is not currently employed by the Niantic app. As of writing this blog there are currently no reports of off-device MITM capability between the endpoint and the back-end servers.
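For readers who haven't seen certificate pinning before, here is a minimal sketch of the idea in Python: on top of normal TLS validation, the client refuses to talk to a server unless its certificate matches a fingerprint shipped with the app. This is an illustration under my own assumptions, not how Niantic's app works or should be built; the function names and the choice to pin the whole leaf certificate by SHA-256 are mine.

```python
import hashlib
import hmac
import socket
import ssl
from typing import Iterable


def cert_fingerprint(der_cert: bytes) -> str:
    """Return the SHA-256 fingerprint of a DER-encoded certificate as hex."""
    return hashlib.sha256(der_cert).hexdigest()


def matches_pin(der_cert: bytes, pinned_fingerprints: Iterable[str]) -> bool:
    """True if the certificate's fingerprint equals one of the pinned values."""
    actual = cert_fingerprint(der_cert)
    return any(
        hmac.compare_digest(actual, pin.lower()) for pin in pinned_fingerprints
    )


def connect_with_pin(host: str, port: int, pinned_fingerprints: Iterable[str]):
    """Open a TLS connection, then reject it unless the leaf cert is pinned.

    The host, port, and pins are supplied by the caller; nothing here is
    specific to any real service.
    """
    pins = list(pinned_fingerprints)
    context = ssl.create_default_context()  # normal CA + hostname checks still apply
    sock = socket.create_connection((host, port), timeout=10)
    try:
        tls = context.wrap_socket(sock, server_hostname=host)
    except Exception:
        sock.close()
        raise
    der_cert = tls.getpeercert(binary_form=True)
    if der_cert is None or not matches_pin(der_cert, pins):
        tls.close()
        raise ssl.SSLError("server certificate does not match any pinned fingerprint")
    return tls
```

With a check like this in place, a researcher (or attacker) who installs their own root CA on the phone can no longer quietly proxy the app's traffic, because the proxy's certificate won't match the pin. The trade-off is operational: pins must be updated whenever the server certificate changes.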

API Security

This is the second most important part of the threat model. The state of the Niantic API is unknown. While there are no reports of security failures in the TLS implementation (other than the missing certificate pinning), there is little information about the actual protocol and how the API validates requests issued against it.

Since there is no information about the Niantic API, and I am not going to go pokemoning around their services to determine if they are weak (as I don't have permission) this part of the threat model is currently marked <acceptable risk>.

This is a very important concept, "acceptable risk". This is the idea that you know there may be a security risk, but you choose to accept it and move on with your life. The fact is, any of the services we use could, at any time, be at risk of manipulation by an adversary. And, the fact is, most of them are at risk many times throughout our lives without us knowing about it. Flaws happen, and they are going to happen. That's just how the world works. Until that changes, unknowns that we cannot compensate for leave us with one of two choices: accept the risk or reject the product or service. In this case, I choose you, Pikachu.

Physical Security

This is the most important aspect of the Pokemon GO threat model, and, perhaps, not for the reason the reader might presume.

The security of the physical endpoint is masked by the controls of the platform and the controls of the application. Since Pokemon GO has no physical component other than the smartphone itself, there is no specific hardware identifier that is associated with a Pokemon Trainer in the game. Rather, the application identities are associated with metadata that may have a relation to a physical device. That is all a part of the unknown API. Yet, those translations are all a part of the hidden abstractions that are presumably secured via TLS and Niantic's back-end services security model. We shouldn't have to worry about them, and if we do have to worry about them, the entire security model is broken.

There is a much more fascinating angle, however: Bluetooth.

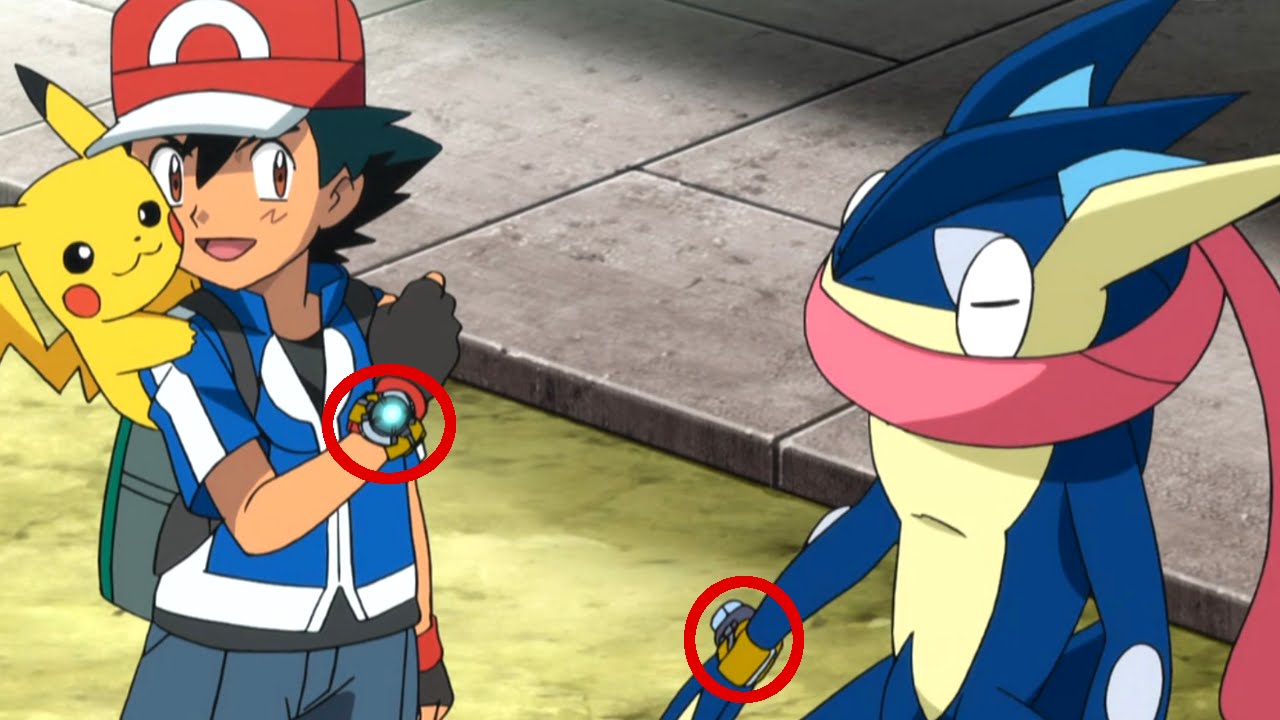

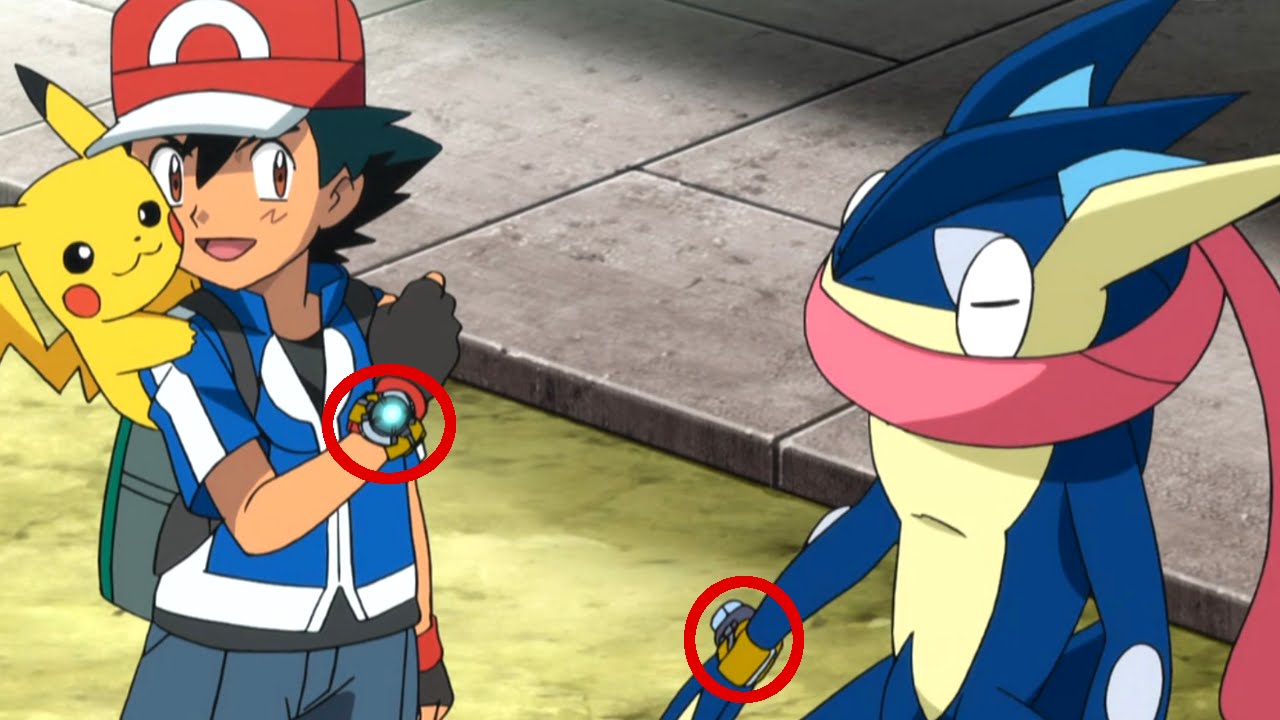

It has been reported that Pokemon GO will include a Bluetooth wearable device that synchronizes with the game. This device would light and/or buzz when a Pokemon is nearby. This allows the user to walk around without having to constantly stare at their phone. Instead, the user can interact with the wearable device, or choose to look at the smart phone only when an event has occurred.

Yet, the device would use Bluetooth Low Energy (BLE) to communicate with the phone. BLE's range is surprisingly long, as I recently found out in designing and manufacturing Lab Mouse Security's custom BLE module. In addition, the BLE wearable must be able to synchronize with the endpoint. If the wearable isn't paired because the phone is off or sleeping, it may emit a beacon identifying what it is. This, and the BLE radio address (MAC), may allow an adversary to physically track the device in the real world. This would allow an adversary to sit at a Pokemon Gym intercepting BLE addresses, then tracking those addresses back to physical locations (homes).

This is a legitimate concern not just for me, but for the entire Bluetooth SIG. To combat these problems, the SIG has made enhancements to the BLE spec. One of these enhancements is generating a random MAC so that a device cannot be tracked. A unique MAC can be used to communicate with other devices, ensuring that the device cannot be linked to a specific user. However, this MAC isn't autogenerated and renegotiated at every session, only sometimes. Plus, the radio firmware must support this feature. As a result, if a phone and BLE wearable are always on and paired, they will likely always use the same random MAC to communicate, negating the benefit of this feature. The feature is imperative and must be a part of the BLE spec, but its implementation must be heavily vetted to ensure that it cannot accidentally be turned into a trackable beacon.
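Here is a toy simulation of why a stable address matters. It is a sketch only: the `Wearable` class and `link_sightings` function are hypothetical names of mine, and the address generation is simplified (real BLE private addresses reserve some bits for the address type and are produced by the radio firmware, not by application code).

```python
import secrets
from collections import defaultdict


def random_address() -> str:
    """Return a random 48-bit address as a colon-separated hex string (simplified)."""
    return ":".join(f"{b:02x}" for b in secrets.token_bytes(6))


class Wearable:
    """Hypothetical BLE wearable that either keeps or rotates its address."""

    def __init__(self, rotate: bool):
        self.rotate = rotate
        self.address = random_address()

    def advertise(self) -> str:
        # A rotating device picks a fresh address for each advertisement here;
        # a non-rotating one keeps reusing the address it started with.
        if self.rotate:
            self.address = random_address()
        return self.address


def link_sightings(sightings):
    """Group (location, address) pairs by address, as a passive sniffer could.

    Only addresses seen in more than one place are returned, since those are
    the ones that let an observer connect locations to each other.
    """
    by_address = defaultdict(list)
    for location, address in sightings:
        by_address[address].append(location)
    return {addr: locs for addr, locs in by_address.items() if len(locs) > 1}


if __name__ == "__main__":
    route = ("gym", "street", "home")
    static = Wearable(rotate=False)
    print(link_sightings([(loc, static.advertise()) for loc in route]))
    rotating = Wearable(rotate=True)
    print(link_sightings([(loc, rotating.advertise()) for loc in route]))
```

The non-rotating device hands the observer a gym-to-home trail under a single address, while the rotating one gives them nothing to join on. An always-on, always-paired wearable that never renegotiates its address behaves like the first case.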

[Image: Beacons, yo.]

Another issue is the security token or public key used to negotiate a secure session. The Bluetooth SIG has added secure session negotiation using ECDH, which, again, is excellent. However, this only secures the negotiation of the session layer and doesn't actually reduce the threat of attack in the event that the core keys used are either guessable or static across a deployment of devices. If the key provisioning or personalization steps are flawed, the resultant set of BLE wearable devices will have flawed communication that can be intercepted and decrypted by anyone with the ability to attack a single physical device. Then, a simple radio trick can be used to perform a MITM attack against BLE communication. It is notable, however, that this attack requires a high level of expertise, time, and equipment, and is unlikely to occur.

And yet, if the same security token is always emitted by a BLE wearable in order to negotiate a connection with a smartphone or peer device, a simple and cheap ($20) BLE sniffer may be able to track the wearable device. Or, at least, map the device to its current MAC, then allow the adversary to track the MAC.
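The point about a static token can be sketched the same way. In this hypothetical example, the sniffer's log is a list of `(location, mac, token)` tuples with made-up values; even though the MAC changes at every sighting, grouping by the unchanging token rebuilds the trail and tells the adversary which MAC to follow right now.

```python
from collections import defaultdict


def track_by_token(sightings):
    """Rebuild per-device trails from (location, mac, token) sightings.

    The token stands in for any value a wearable repeats in the clear while
    negotiating a connection. All names and values are illustrative.
    """
    trails = defaultdict(lambda: {"locations": [], "current_mac": None})
    for location, mac, token in sightings:
        trails[token]["locations"].append(location)
        trails[token]["current_mac"] = mac  # most recent MAC seen with this token
    return dict(trails)


if __name__ == "__main__":
    log = [
        ("gym", "aa:aa:aa:aa:aa:01", "token-123"),
        ("street", "bb:bb:bb:bb:bb:02", "token-123"),
        ("home", "cc:cc:cc:cc:cc:03", "token-123"),
    ]
    print(track_by_token(log))
```

In other words, address randomization only helps if nothing else the device emits is stable enough to act as an identifier.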

Wrapping it Up

Thus, when the wearable device does come out, I hope the Pokemon GO team at Niantic heavily audits the BLE device to ensure that security is properly implemented. This way, our super fun and awesome video game won't turn into a very simple tool for tracking kids back to their homes. But, with Google involved, I'm sure a plan is already in place.

But, for now, here is a set of recommendations for Pokemon GO users:

- Generate a Google account just for Pokemon GO; don't use a work or personal account

- Don't use the Pokemon GO app at your home, only in public places, to reduce the potential for stalking

- Don't use a jailbroken phone

- Keep your phone's firmware updated

- Never go to isolated places alone

- Never go to parks, poorly lit areas, or isolated locations at night

- Always Pokemon GO with a buddy

- Hide your Pokemon Trainer name when taking screenshots by using an image editing app, such as Photoshop

Keep catching them all!

Best,

Don A. Bailey

CEO / Founder

Lab Mouse Security